Major AI foundation models like ChatGPT, Claude, Bard and Llama 2 are becoming less transparent, according to a team of Stanford University researchers.

The new study comes from the university’s Center for Research on Foundation Models (CRFM), part of the Stanford Institute for Human-Centered Artificial Intelligence (HAI), which studies and develops foundational AI models.

“Companies in the foundation model space are becoming less transparent,” said Rishi Bommasani, Society Lead at CRFM, according to an official press release. This opacity poses challenges for businesses, policymakers, and consumers alike.

The companies behind the most accurate and widely used LLMs all say they want to do good, but they hold different views on openness and transparency.

OpenAI, for example, has embraced a lack of transparency as a cautionary measure.

“We now believe we were wrong in our original thinking about openness, and have pivoted from thinking we should release everything to thinking that we should figure out how to safely share access to and benefits of the systems,” an official blog reads.

A 2020 report from MIT Technology Review suggests that secrecy has been part of the company's culture for a while. “Their accounts suggest that OpenAI, for all its noble aspirations, is obsessed with maintaining secrecy, protecting its image, and retaining the loyalty of its employees,” the report said.

For its part, Anthropic’s core views on AI safety show the startup’s commitment to “treating AI systems that are transparent and interpretable,” and its focus on setting “transparency and procedural measures to ensure verifiable compliance with (its) commitments.” Also, in August of 2023, Google announced the launch of a Transparency Center to better disclose its policies and tackle this issue.

But why should users care about AI transparency?

“Less transparency makes it harder for other businesses to know if they can safely build applications that rely on commercial foundation models; for academics to rely on commercial foundation models for research; for policymakers to design meaningful policies to rein in this powerful technology; and for consumers to understand model limitations or seek redress for harms caused,” Stanford’s paper argues.

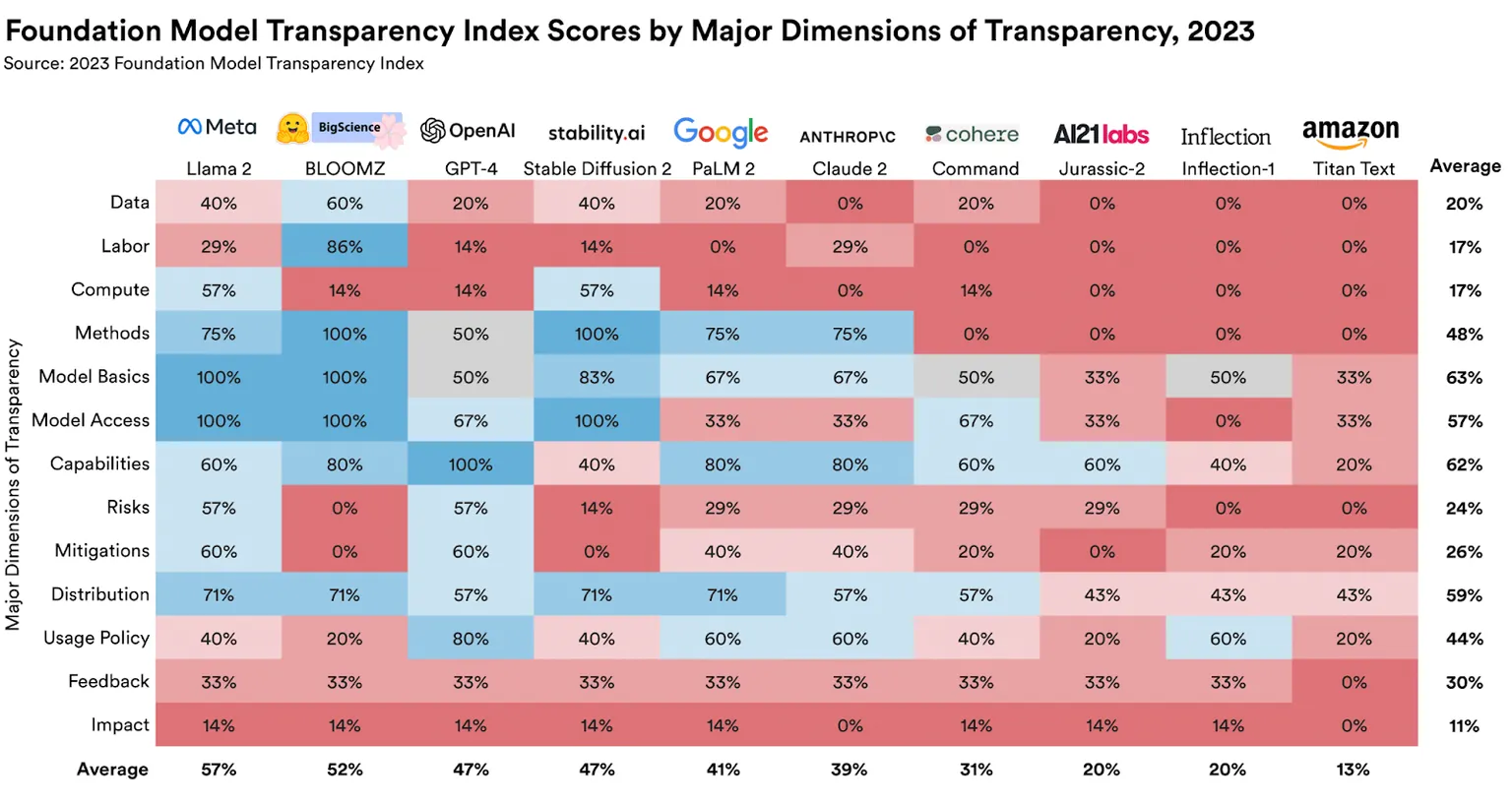

To address this, Bommasani and a team from Stanford, MIT, and Princeton devised the Foundation Model Transparency Index (FMTI). The index evaluates a wide set of topics to give a comprehensive view of how transparent companies are about their AI models. It covers aspects such as how a company builds a foundation model, the availability of its dataset, how the model works, and how it is used downstream.

The results were less than stellar. The highest scores ranged from 47 to 54 on a scale of 0 to 100, with Meta’s Llama 2 leading the pack. OpenAI scored 47%, Google just 41%, and Anthropic 39%.

Source: Stanford Center for Research on Foundation Models

The distinction between open-source and closed-source models also played a role in the rankings.

“In particular, every open developer is nearly at least as transparent in terms of aggregate score as the highest-scoring closed developer,” the team at Stanford University wrote in an extensive research paper. In plain terms, even the lowest-scoring open-source model is roughly as transparent as the best closed-source model.

Transparency in AI models isn’t just a matter of academic interest. The need for properly transparent AI development has been voiced by policymakers globally.

“For many policymakers in the EU as well as in the U.S., the U.K., China, Canada, the G7, and a wide range of other governments, transparency is a major policy priority,” said Bommasani.

The broader implications of these findings are clear. As AI models become more integrated into various sectors, transparency becomes paramount, not only for ethical reasons but also for practical applications and trustworthiness.

Edited by Stacy Elliott.


Source: https://decrypt.co/202555/ai-model-transparency-getting-worse-stanford-researchers