Huawei unveiled its new artificial intelligence (AI) storage system, the OceanStor A310, at GITEX GLOBAL 2023 last week, in an attempt to address the storage bottlenecks that hold back large AI model applications. Designed for the era of large AI models, the OceanStor A310 is intended to serve foundation model training, industry-specific model training, and inference for models tailored to specific scenarios.

Think of the OceanStor A310 as a highly efficient librarian in a vast digital library, quickly fetching pieces of information. By comparison, IBM’s ESS 3500 is a slower librarian. The faster the storage system can fetch information, the faster AI applications can run and reach decisions in a timely manner. This speed of access is what Huawei says sets the OceanStor A310 apart.

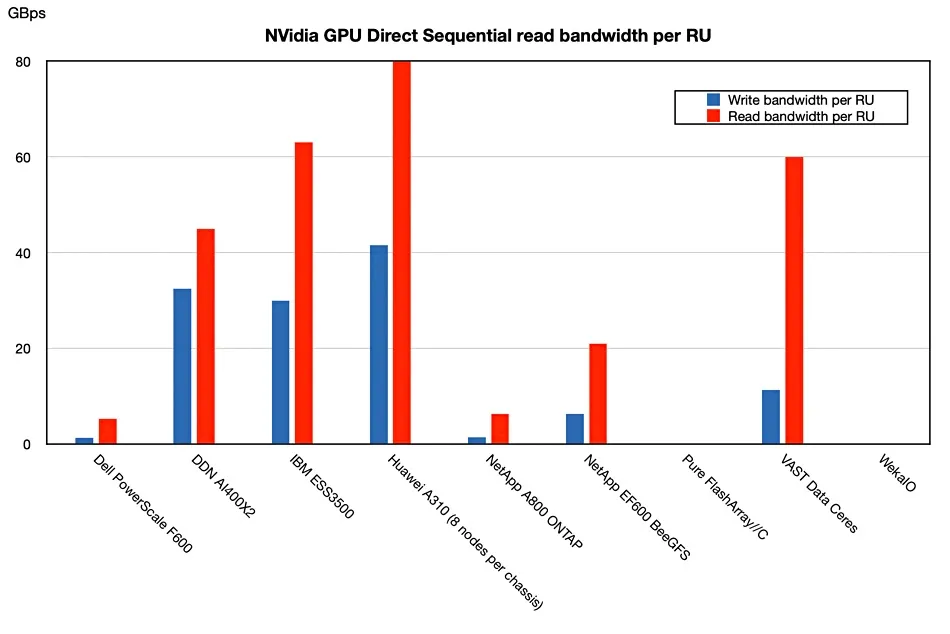

The OceanStor A310’s edge appears to lie in how quickly it can deliver data for AI processing. Compared with IBM’s ESS 3500, Huawei’s latest all-flash array reportedly feeds Nvidia GPUs almost four times faster on a per-rack-unit basis. That comparison uses Nvidia’s Magnum IO GPUDirect Storage, in which data moves directly from NVMe storage into GPU memory instead of being staged through the host CPU’s memory.

Huawei says the OceanStor A310 delivers up to 400GBps of sequential read bandwidth and 208GBps of sequential write bandwidth. However, it remains unclear how open-source and closed-source frameworks affect these numbers.

Architecturally, the OceanStor A310 is designed as data lake storage for deep learning, potentially offering unlimited horizontal scalability and high performance for mixed workloads.

“We know that for AI applications, the biggest challenge is to improve the efficiency for AI model training,” Evangeline Wang, of Huawei’s product management and marketing team, said in a statement shared by tech outlet Blocks and Files. “The biggest challenge for the storage system during the AI training is to keep feeding the data to the CPU, to the GPUs,” she added. “That requires the storage system to provide best performance.”

To tackle this issue, each OceanStor A310 holds up to 96 NVMe SSDs along with its own processors and memory cache. Users can cluster up to 4,096 A310s, which share a global file system that supports standard protocols for applications. The OceanStor A310 aims to minimize data transmission time through SmartNICs and a massively parallel design.
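To put these scale-out limits in perspective, the short Python sketch below multiplies them out. It is a back-of-the-envelope calculation, not a performance model. It assumes every node is fully populated, treats the 400GBps read figure as a per-A310 number, and assumes perfectly linear scaling; the article states none of these. The constant and function names are hypothetical.

```python
# Back-of-the-envelope scale of a maximal OceanStor A310 cluster,
# using only the limits quoted in the article.

MAX_NODES = 4_096          # maximum A310s in one cluster (per the article)
SSDS_PER_NODE = 96         # maximum NVMe SSDs per A310 (per the article)
READ_GBPS_PER_NODE = 400   # quoted peak sequential read; ASSUMED to be per A310


def max_ssd_count(nodes: int = MAX_NODES, ssds_per_node: int = SSDS_PER_NODE) -> int:
    """Total NVMe SSDs if every node in the cluster is fully populated."""
    return nodes * ssds_per_node


def ideal_aggregate_read_gbps(nodes: int, per_node_gbps: float = READ_GBPS_PER_NODE) -> float:
    """Ceiling on cluster read bandwidth under an ASSUMED perfectly linear
    scale-out; real clusters typically scale less than linearly."""
    return nodes * per_node_gbps


if __name__ == "__main__":
    print(f"Max SSDs in one cluster: {max_ssd_count():,}")
    for n in (1, 16, MAX_NODES):
        print(f"{n:>5} nodes -> at most {ideal_aggregate_read_gbps(n):,.0f} GBps (ideal)")
```

Under those assumptions, a maximal cluster would hold 393,216 SSDs. The linear bandwidth figure is an idealized ceiling, not an expected result.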

“Huawei’s A310, with its small nodes, was fastest overall at both sequential reading and writing, with its 41.6/80GBps sequential write/read bandwidth vs IBM’s 30/63GBps numbers,” Blocks and Files said in an analysis comparing Huawei’s system with its direct competitors.
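The quoted per-rack-unit (RU) figures can be cross-checked against the totals given earlier. Dividing the 400GBps read total by the 80GBps per-RU figure implies a 5 RU chassis, and normalizing the 208GBps write total by that same height reproduces the quoted 41.6GBps per RU. The chassis height here is inferred from these ratios, not stated in the article, and the names in this Python sketch are hypothetical.

```python
# Normalize storage bandwidth per rack unit (RU) and compare systems,
# using only the figures quoted in the article.

HUAWEI_TOTAL = {"read": 400.0, "write": 208.0}   # GBps, whole A310
HUAWEI_PER_RU = {"read": 80.0, "write": 41.6}    # GBps per RU, as quoted
IBM_PER_RU = {"read": 63.0, "write": 30.0}       # GBps per RU, IBM ESS 3500


def per_rack_unit(total_gbps: float, rack_units: float) -> float:
    """Bandwidth density: total bandwidth divided by chassis height in RU."""
    return total_gbps / rack_units


def implied_rack_units(total_gbps: float, per_ru_gbps: float) -> float:
    """Chassis height implied by a total figure and its per-RU counterpart."""
    return total_gbps / per_ru_gbps


if __name__ == "__main__":
    # Infer the height from the read pair, then reuse it for the write total.
    ru = implied_rack_units(HUAWEI_TOTAL["read"], HUAWEI_PER_RU["read"])
    print(f"Implied A310 height: {ru:.0f} RU")
    for op in ("read", "write"):
        density = per_rack_unit(HUAWEI_TOTAL[op], ru)
        ratio = density / IBM_PER_RU[op]
        print(f"{op:>5}: {density:.1f} GBps/RU vs IBM {IBM_PER_RU[op]:.0f} -> {ratio:.2f}x")
```

On these sequential figures, the per-RU advantage over the ESS 3500 works out to about 1.27x for reads and 1.39x for writes.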

Image: Blocks and Files

The OceanStor A310 arrives as the AI industry looks for data storage and processing systems that can keep pace with large-scale model training. Huawei is positioning it as a way to keep GPUs supplied with data, which may make AI model training more efficient.

However, United States sanctions on Huawei, imposed primarily over national security concerns and the company’s alleged ties to the Chinese government, add a layer of complexity to Huawei’s venture into AI storage.

Huawei’s OceanStor A310 could prove significant. By targeting inefficiencies in how data is stored and delivered for AI workloads, Huawei is attempting to challenge established storage suppliers and nudge the AI industry toward more efficient infrastructure.

Edited by Andrew Hayward


Source: https://decrypt.co/202568/huawei-unveils-oceanstor-a310-speedy-storage-solution-ai-model-trainers