In the ever-evolving world of tech, Elon Musk’s endeavors seldom fail to make waves. Months after his clarion call to halt the training of LLMs more capable than GPT-4, Musk played his signature move: doing precisely the opposite of what he preached. Enter xAI, Musk’s latest brainchild, and Grok, its brand new LLM announced on Saturday.

Announcing Grok!

Grok is an AI modeled after the Hitchhiker’s Guide to the Galaxy, so intended to answer almost anything and, far harder, even suggest what questions to ask!

Grok is designed to answer questions with a bit of wit and has a rebellious streak, so please don’t use…

— xAI (@xai) November 5, 2023

The venture made headlines, not just because of Musk’s involvement but also due to the stellar line-up of top AI researchers that the company managed to recruit from leading startups and tech giants. Promoted with the tantalizing allure of an AI designed to “understand the world,” xAI remained mysteriously coy about the “how” and the “what” of its operations… at least until last week.

Is this another classic Musk move to challenge the status quo, or just an overhyped chatbot riding the massive wave of popularity and hype surrounding its predecessors?

The Good

A constant stream of real-time knowledge

Having access to the entire Twitter firehose of content makes Grok a potential game-changer. As xAI highlighted, Grok will have “real-time knowledge of the world,” processing news and diverse commentary about current events as they are posted.

By learning about events from various viewpoints, and ingesting Twitter community notes—which are essentially annotations—Grok will benefit from a multifaceted view of the world.

According to recent studies, people have already shifted their behavior to look for news on social media first before turning to mainstream media. Grok’s integration with Twitter could further expedite this process, offering users immediate comments, context, and—if executed well—on-the-spot fact-checking. The real-time knowledge feature, as xAI highlighted, ensures that Grok remains updated with the pulse of the world, enabling it to provide timely and relevant responses.

Fun Mode: Elon’s dream made real

Elon Musk’s vision of a fun-loving AI seems to have been brought to life with Grok’s so-called “Fun Mode.” This feature allows the LLM to craft jokes, deliver humorous yet factually accurate responses, and provide users with a whimsical and casual conversational experience.

One of the challenges with existing LLMs, like ChatGPT, is that some users feel they’ve been overly sanitized to ensure political correctness, potentially making interactions less organic and spontaneous. Additionally, some localized LLMs aren’t adept at prolonged interactions. Grok, with its fun mode, promises to fill this gap, potentially serving as an engaging time-waster for those looking to unwind.

Grok has real-time access to info via the 𝕏 platform, which is a massive advantage over other models.

It’s also based & loves sarcasm. I have no idea who could have guided it this way 🤷♂️ 🤣 pic.twitter.com/e5OwuGvZ3Z

— Elon Musk (@elonmusk) November 4, 2023

This concept isn’t entirely new, as Quora’s Poe offers a similar service with its fine-tuned chatbots, each boasting a unique personality. However, having this embedded in an LLM with Grok’s capabilities takes the experience to a new level.

Native internet access

One of Grok’s other differentiators is the ability to access the internet without requiring a plug-in or other module.

While the exact scope of its browsing capabilities remains to be clarified, the idea is tantalizing. Imagine an LLM that can improve factual accuracy because it can cross-reference data in real-time. Coupled with its access to Twitter content, Grok could revolutionize how users interact with AI, knowing that the information they receive is not just based on pre-existing training data but is continually updated and verified.

Multitasking

Grok is reportedly capable of multitasking, allowing users to carry out multiple conversations concurrently. Users can explore various topics, wait for a response on one thread, and continue with another.

The chatbot also offers branching in conversations, letting users dig deeper into specific areas without disrupting the main discussion. A visual guide to all conversation branches makes it easy for users to navigate between topics.

Grok also offers a built-in markdown editor, which lets users download, edit, and format Grok’s responses for later use. This tool, combined with branching, ensures users can work with specific conversation branches and re-engage seamlessly.

These are some of the UI features in Grok. First, it allows you to multi-task. You can run several concurrent conversations and switch between them as they progress. pic.twitter.com/aXAG0M2oPF

— Toby Pohlen (@TobyPhln) November 5, 2023

Minimal censorship: a free-speaking AI

Elon Musk’s vision for Grok was clear: an AI that doesn’t shy away from speaking its digital mind.

While all major AI chatbots have guardrails in place to avoid potential harm or misinformation, those guardrails can sometimes feel restrictive. Users have noted instances where models like ChatGPT, Llama, and Claude might hold back responses, erring on the side of caution to avoid potential offense. However, this may filter out answers that are benign or genuinely sought after.

Grok is being allowed more freedom in its responses, and thus may offer a more authentic and unrestricted conversational experience. As highlighted by xAI, Grok’s design empowers it to address spicy questions that other AI systems might sidestep.

It’s evident that this AI offers a unique blend of real-time information, humor, accuracy, and freedom. However, as with any innovation, there are challenges and potential pitfalls to consider as well.

The Bad

Rushed development and limited training

From the get-go, Grok’s rapid development raised some eyebrows. As stated by xAI, “Grok is still a very early beta product—the best we could do with two months of training.” In the world of LLMs, two months and 33 billion parameters sound like a drop in the bucket.

For perspective, OpenAI has been transparent about its developmental process, mentioning, “We’ve spent six months iteratively aligning GPT-4.” The disparity in developmental timelines suggests that Grok’s development may have been rushed to ride the AI hype wave.

Moreover, xAI remains tight-lipped about the extent of hardware utilized during Grok’s training, leaving room for speculation.

All about the parameters

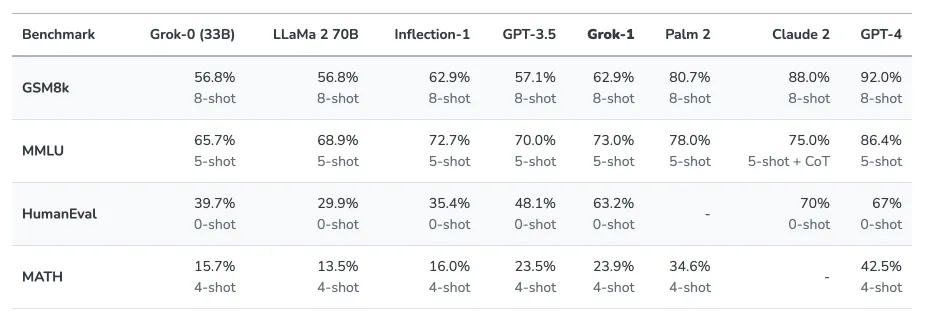

To the uninitiated, parameters are the numerical weights an LLM learns during training, and their count is a rough proxy for how much information or knowledge the model can hold. They indicate the effective brain capacity of the AI, shaping its ability to process and generate information. Grok, with its 33 billion parameters, might sound impressive at first glance.

However, in the competitive LLM landscape, it’s just another player. In fact, its parameter count might fall short in powering intricate corporate needs and the high-quality outputs that titans like ChatGPT, Claude, and Bard have set as the gold standard.
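To give the parameter count a concrete sense of scale, here is a minimal sketch that converts it into raw storage size. The bytes-per-parameter values are standard for those numeric formats, but which precision Grok actually uses is an assumption here, since xAI has not disclosed it; the function name `weights_size_gb` is hypothetical.

```python
# Bytes needed to store one parameter at common numeric precisions.
# Assumption: xAI has not said which precision Grok uses, so all three are shown.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}

GROK_PARAMS = 33_000_000_000  # 33 billion, the figure quoted in this article


def weights_size_gb(num_params: int, precision: str) -> float:
    """Return the raw size of the model weights in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for precision in BYTES_PER_PARAM:
        size = weights_size_gb(GROK_PARAMS, precision)
        print(f"{precision}: about {size:.0f} GB of weights")
```

This counts only the stored weights. It says nothing about the quality of the training data or the time spent training, which is why parameter count alone is a crude yardstick.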

This low parameter count is part of the reason why Grok fails to beat other mainstream models in key benchmarks like HumanEval or MMLU, according to results published by xAI.

Besides the parameter count, there’s also the issue of context handling, meaning how much information an AI chatbot can take in at once. Grok is not especially impressive in this area. According to xAI, Grok understands 8,192 tokens of context, but GPT-4 juggles a whopping 32,000, and Claude takes it even further with up to 100,000 tokens. OpenAI’s new GPT-4 Turbo reaches a 128,000-token context window.
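To put those context windows side by side, the sketch below uses only the token figures quoted above. The conversion of roughly 0.75 English words per token is a common rule of thumb and an assumption here, not a figure from xAI or OpenAI; the helper names are hypothetical.

```python
# Context window sizes in tokens, as quoted in this article.
CONTEXT_WINDOWS = {
    "Grok": 8_192,
    "GPT-4": 32_000,
    "Claude": 100_000,
    "GPT-4 Turbo": 128_000,
}

# Assumption: roughly 0.75 English words per token (a rule of thumb, not exact).
WORDS_PER_TOKEN = 0.75


def approx_words(tokens: int) -> int:
    """Estimate how many English words fit in a given number of tokens."""
    return int(tokens * WORDS_PER_TOKEN)


def relative_to_grok(model: str) -> float:
    """Return how many times larger a model's context window is than Grok's."""
    return CONTEXT_WINDOWS[model] / CONTEXT_WINDOWS["Grok"]


if __name__ == "__main__":
    for model, tokens in CONTEXT_WINDOWS.items():
        print(
            f"{model}: {tokens:,} tokens, roughly {approx_words(tokens):,} words, "
            f"{relative_to_grok(model):.1f}x Grok"
        )
```

Under that rule of thumb, Grok’s window holds about 6,000 words of conversation, while GPT-4 Turbo’s is more than 15 times larger.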

The price of innovation

Cost is a crucial factor when evaluating the value of any product, and Grok is no exception. The chatbot will be available for users willing to pay $16 a month for the privilege of interacting with it.

With free offerings like Claude 2 and ChatGPT equipped with GPT-3.5 Turbo, Grok may be a hard sell—especially considering that these free models have been touted for their accuracy, already outpacing Grok in certain benchmarks.

Furthermore, even the most potent LLM on the block, GPT-4, promises to be better than Grok, with the added advantage of being widely accessible, multimodal, and powerful.

Could Grok’s introduction be largely a strategic move to boost subscriptions for Twitter Blue, thereby amplifying Twitter’s revenue stream?

These concerns highlight Grok’s challenges in establishing itself as a major player in the LLM domain. And its downsides don’t stop at the price tag.

The Ugly

Imitation of fiction

Basing an LLM on a fictional character from a popular novel is, without a doubt, a creative choice. While the charm of a fictional personality might be alluring, it poses inherent risks in a world that increasingly relies on accurate information. Users who turn to AI for serious queries or advice might end up at odds with a system designed to emulate a comedic character.

Furthermore, as the line between fiction and reality blurs, there’s a concern about users mistaking playful or satirical responses for factual information. In a digital age, where every piece of information is dissected and shared, the ramifications of such misconceptions could be widespread, especially when more than one language comes into the picture.

While humor and wit have their place, it’s essential to strike a balance, especially when users seek critical insights. Prioritizing humor over accuracy might entertain, but it also undermines the very essence of what an LLM should offer: reliable information.

Overpromised and underdelivered

Elon Musk’s grand promises about Grok have set the stage for sky-high expectations. Digging deeper reveals a potential mismatch between the hype and reality. Models built with traditional LLM training methods are constrained by their training data, which is a critical limitation: they can’t genuinely venture into “super AI” territory.

Grok’s training, with its 33 billion parameters and a couple of months of development, seems dwarfed when compared with other LLM giants. While the idea of a playful, fictional personality sounds enticing, expecting it to deliver groundbreaking results using standard training methods might be a stretch.

The AI community is no stranger to exaggeration, but with the rapid advancements in the field, it’s crucial for users to sift through the hype. Achieving a “super AI” status is a monumental challenge, and it’s unlikely that Grok, with its current configuration and training, will qualify.

Indeed, to prove Grok’s power, Elon Musk compared its conversational chatbot with a small LLM trained for coding. Suffice it to say, it was not a fair fight.

The menace of misinformation

LLMs are powerful, but they aren’t infallible. In the absence of rigorous standards, discerning fact from fiction becomes a Herculean task. Recent history offers cautionary tales, like chatbots trained on 4chan data or even Tay, an earlier chatbot from Microsoft that was allowed to interact on Twitter. These bots not only spewed hate speech, but also masqueraded convincingly as real people, fooling a vast online audience.

This flirtation with misinformation isn’t isolated. With Twitter’s image taking hits since Elon’s takeover, there may be concerns about Grok’s ability to deliver accurate information consistently. LLMs occasionally fall prey to hallucinations, and if these distortions are consumed as truths, the ripple effects can be alarming.

The potential for misinformation is a ticking time bomb. As users increasingly lean on AI for insights, misinformation can lead to flawed decision-making. For Grok to be a trusted ally, it must tread carefully, ensuring its playful demeanor doesn’t cloud the truth.

Missing multimodal capabilities?

In the burgeoning world of AI, Grok’s text-only approach feels like a relic of the past. While users are expected to pay for Grok’s services, they might rightly question why, especially when other LLMs are offering richer, multimodal experiences.

For instance, GPT-4V has already made strides in the multimodal domain, boasting the ability to hear, see, and speak. Google’s upcoming Gemini promises a similar suite of features. Against this backdrop, Grok’s offerings seem lackluster, raising more questions about its value proposition.

It’s a competitive market, and users are becoming increasingly discerning. If Grok wishes to carve a niche for itself, it needs to offer something truly exceptional. As it stands, with competitors offering enhanced features and better accuracy—often for free—Grok has its work cut out for it.

Conclusion

Grok’s launch has sparked excitement but also raised a fair amount of skepticism. Its barebones MVP (minimum viable product) approach allows for rapid iteration and improvement based on user feedback. But competition from AI giants with vastly more resources poses an uphill battle.

For Grok to succeed, it needs capabilities that are both novel and useful. Mere entertainment value will not suffice in a crowded market. The AI folks don’t get distracted by cute dog memes.

In the end, Grok’s fate depends on balancing innovation and practicality. While healthy skepticism is fair, writing it off completely could be premature. Grok may yet pioneer new frontiers or end up a footnote in AI’s evolution. Either way, its unconventional origins guarantee Grok will be an intriguing chapter in the unfolding story of artificial intelligence.

Edited by Ryan Ozawa.

Source: https://decrypt.co/204584/the-good-the-bad-and-the-ugly-of-grok-elon-musks-new-ai-chatbot