Curiosity about Meta’s next big move is reaching a fever pitch in the race to dominate the artificial intelligence landscape. With its Llama 2 generative text model—released in July—well established in the marketplace, AI watchers are hungrily searching for signs of Llama 3.

If industry murmurs are to be believed, the tech titan’s sequel to its open-source success could arrive in early 2024.

Meta has not officially confirmed the rumors, but Mark Zuckerberg recently shed some light on the future of Meta's large language models (LLMs), starting with an acknowledgment that a next-generation model is in the works. But, he said, that new foundation model is still on the back burner while the priority remains building Llama 2 into consumer products.

“I mean, there’s always another model that we’re training,” he said in a podcast interview focused on the intersection of AI and the metaverse. “We trained Llama 2, and we released it as an open-source model, and right now the priority is building that into a bunch of consumer products…

“But yeah, we’re also working on the future foundation models, and I don’t have anything new or news on that,” he continued. “I don’t know exactly when it’s going to be ready.”

Still, patterns in Meta's development cycles and its hefty hardware investments hint at a looming launch. Llama 1 and Llama 2 were each trained over roughly six-month windows, and if that cadence holds, Llama 3, which is speculated to be on par with OpenAI’s GPT-4, could launch in the first half of 2024.

Adding depth to the speculation, Reddit user llamaShill has put forth a comprehensive analysis of Meta’s historical model development cycles.

According to the user, Llama 1 was trained from July 2022 to January 2023 and Llama 2 from January to July 2023, which would put Llama 3’s training window at July 2023 to January 2024. That timeline fits the picture of a company pushing hard to ship its next model, one that could stand shoulder to shoulder with GPT-4.

Meanwhile, tech forums and social media are abuzz with discussions on how this new iteration could re-establish Meta’s competitive edge. The tech community has also pieced together a likely timeline from the crumbs of information available.

Overheard at a Meta GenAI social:

“We have compute to train Llama 3 and 4. The plan is for Llama-3 to be as good as GPT-4.”

“Wow, if Llama-3 is as good as GPT-4, will you guys still open source it?”

“Yeah we will. Sorry alignment people.”

— jason (@agikoala) August 25, 2023

Add to that a bit of Twitter hearsay: a conversation reportedly overheard at a “Meta GenAI” social and later tweeted by OpenAI researcher Jason Wei. “We have compute to train Llama 3 and 4,” an unidentified source said, according to Wei, going on to affirm that Llama 3 would also be open-sourced.

The company’s partnership with Dell, which offers Llama 2 on-premises to enterprise users, underscores a focus on data control and security, a move that is both strategic and a sign of the times. As Meta gears up to stand toe-to-toe with giants like OpenAI and Google, that focus is critical.

Meta is also infusing AI into many of its products, so it makes sense for the company to raise its stakes so as not to be left behind. Llama 2 powers Meta AI along with other services such as Meta’s chatbots, its generative AI tools, and its AI glasses, to name a few.

Amidst this whirlwind of speculation, Mark Zuckerberg’s musings on open-sourcing Llama 3 have only served to intrigue and mystify. “We would need a process to red team this, and make it safe,” Zuckerberg shared during a recent podcast with computer scientist Lex Fridman.

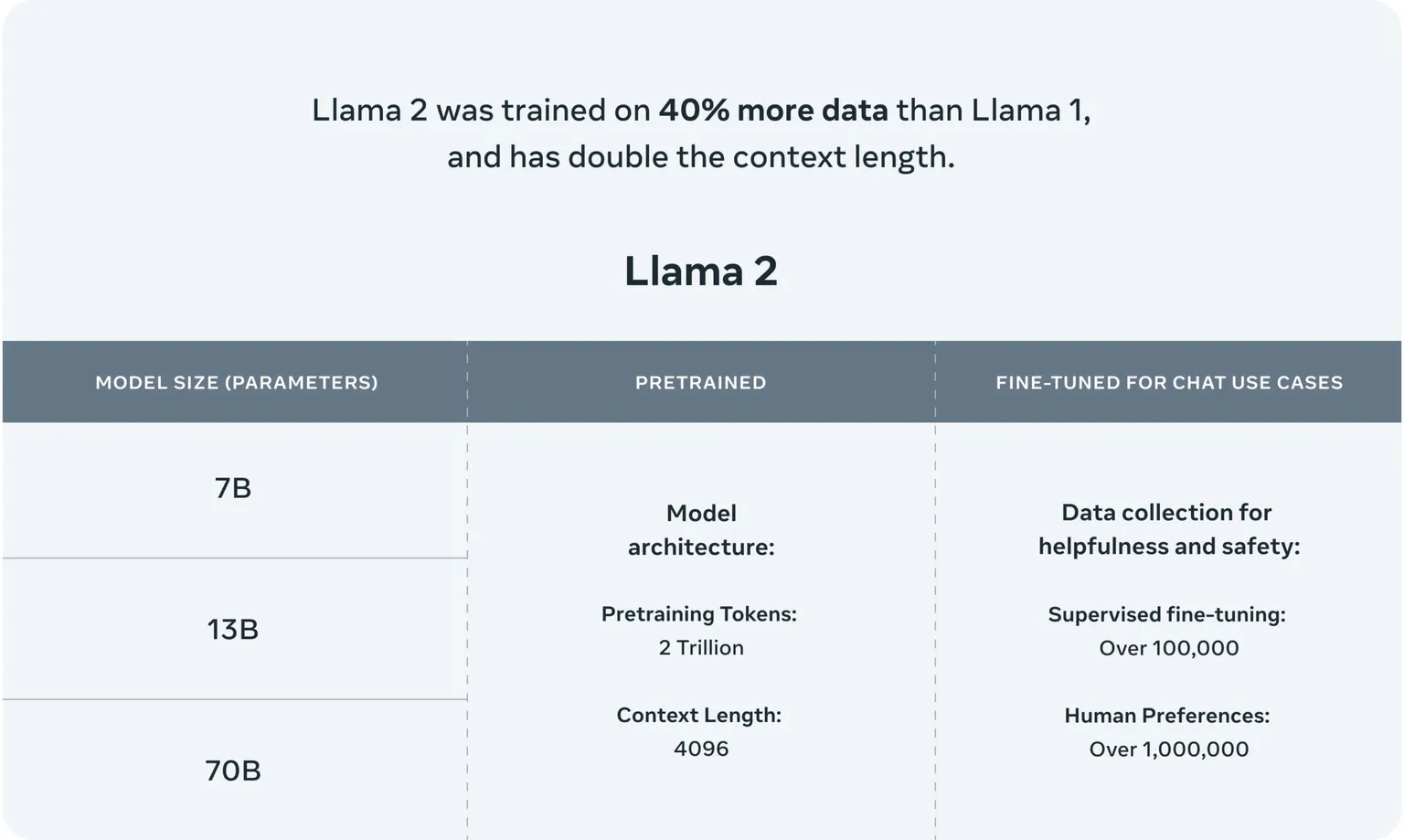

Llama 2 comes in three sizes, with 7 billion, 13 billion, and 70 billion parameters, each suited to different levels of task complexity and available computing power. Parameters are the learned weights that determine how a model understands and generates language; a higher parameter count generally correlates with greater sophistication and output quality, at the cost of more compute.

Llama 2 was pretrained on an extensive corpus of 2 trillion tokens, which underpins its ability to generate human-like text across a wide array of subjects and contexts.

Image courtesy of Meta


In the background, the hardware groundwork is also being laid. As reported by Decrypt, Meta is stocking a data center with Nvidia H100s, among the most powerful GPUs available for AI training, a clear sign that the wheels are well in motion.

Yet, for all the excitement and speculation, the truth remains shrouded in corporate secrecy.

Meta’s ability to compete in the AI space will be shaped largely by training timelines, hardware investments, and the open-source question. In the meantime, anticipation is palpable, and a 2024 release of Llama 3 looks probable.

Edited by Ryan Ozawa.


Source: https://decrypt.co/205436/llama-3-release-date-rumored-meta-ai-challenger