A startup focused on building custom AI models for big businesses has announced the public launch of Reka Core, a multimodal language model capable of processing text, image, video, and audio inputs.

Enterprise software firm Reka AI was founded in 2022 by researchers from Google’s DeepMind, Chinese tech giant Baidu, and Meta. It has already raised $60 million in funding from investors including New York Life Ventures, Radical Ventures, Snowflake Ventures, DST Global, and investor and entrepreneur Nat Friedman.

Reka Core is the company’s largest and most capable model to date. And Reka AI—referencing its own tests—says it stands up well against many much larger, well-funded models. In a research paper aggregating the results of several synthetic benchmarks, Reka claims its Core model can compete with AI tools from OpenAI, Anthropic, and Google.

One of the key metrics is the MMMU, the Massive Multi-discipline Multimodal Understanding and Reasoning benchmark. It’s a dataset designed to test the capabilities of large language models (LLMs) in multimodal understanding and reasoning at a level comparable to human experts.

“Core is comparable to GPT-4V on MMMU, outperforms Claude-3 Opus on our multimodal human evaluation conducted by an independent third party, and surpasses Gemini Ultra on video tasks,” Reka AI said in its research paper. “On language tasks, Core is competitive with other frontier models on well-established benchmarks.”

When it comes to understanding video input, measured with the Perception-Test benchmark, Core beats Gemini Ultra, the only other video-capable model.

Image: Reka AI

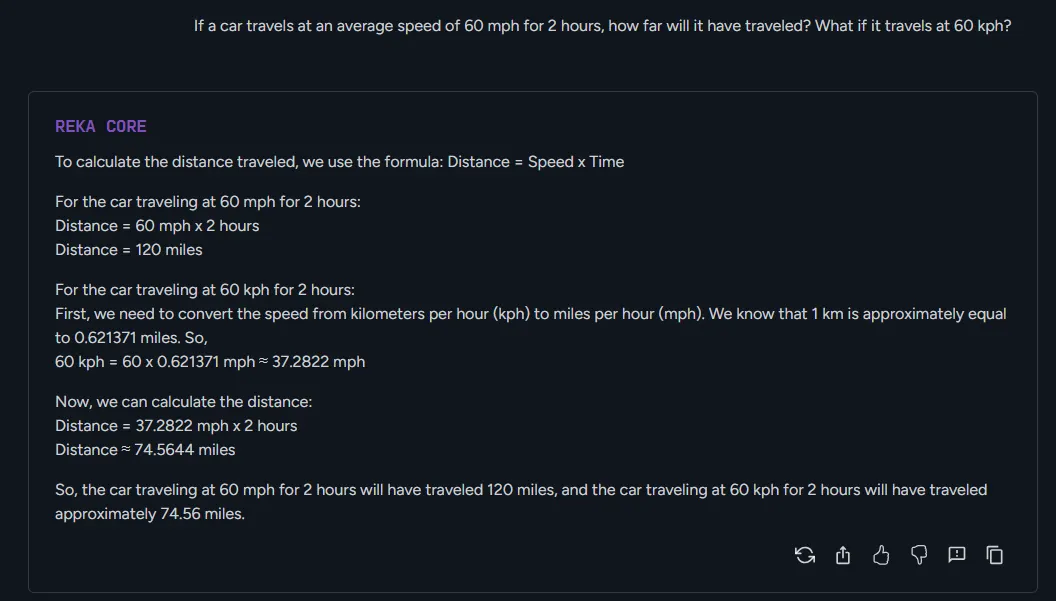

Overall, Reka Core offers multimodal (image and video) understanding, good reasoning abilities, code generation, and multilingual fluency. The chatbot interface is free to use, and Reka Core is also available via API. For API access, developers can expect to pay $10 for every 1 million input tokens and $25 for the same number of output tokens.
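To make the pricing concrete, here is a minimal sketch of a per-request cost estimate. It assumes the $10 rate applies to input tokens and the $25 rate to output tokens; the function and constant names are hypothetical and are not part of any Reka SDK.

```python
# Prices quoted in the article, in US dollars per 1 million tokens.
# Assumption: the $10 rate is for input tokens, the $25 rate for output tokens.
INPUT_PRICE_PER_MILLION = 10.0
OUTPUT_PRICE_PER_MILLION = 25.0


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated API cost in US dollars for one request."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical request: a 3,000-token prompt and a 1,000-token reply.
    print(f"${estimate_cost(3_000, 1_000):.4f}")
```

Under these assumptions, output tokens cost 2.5 times as much as input tokens, so long generated answers dominate the bill more quickly than long prompts.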

The model, however, struggles with long prompts. For efficiency reasons, its free version handles only 4,000 tokens of context, although an extended context of up to 128,000 tokens is available, according to Reka. Competing models from OpenAI, Anthropic, and Google have a standard context window of 128,000 tokens, with experimental versions handling up to 1 million tokens.
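As a rough illustration of what those limits mean in practice, the sketch below checks which of the two context sizes a prompt would fit into. The four-characters-per-token ratio is only a common rule of thumb for English text, not Reka's actual tokenizer, and all names here are hypothetical.

```python
from typing import Optional

# Context limits quoted in the article, in tokens.
FREE_CONTEXT_TOKENS = 4_000
EXTENDED_CONTEXT_TOKENS = 128_000

# Assumption: roughly 4 characters per token for English text.
# A real tokenizer would give different counts.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Estimate the token count of text, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def smallest_fitting_window(text: str) -> Optional[str]:
    """Return "free", "extended", or None if the text fits neither window."""
    tokens = estimate_tokens(text)
    if tokens <= FREE_CONTEXT_TOKENS:
        return "free"
    if tokens <= EXTENDED_CONTEXT_TOKENS:
        return "extended"
    return None


if __name__ == "__main__":
    prompt = "Plan a weekend in Paris. " * 1_000  # hypothetical long prompt
    print(estimate_tokens(prompt), smallest_fitting_window(prompt))
```

By this estimate, a 4,000-token window holds about 16,000 characters of English text, which a long document or an extended conversation can exhaust quickly.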

Reka Core was trained from scratch on thousands of GPUs over several months. The company said it used textual data from 32 languages, making the model fluent in English as well as several Asian and European languages. Its developers say it also ingested some multilingual training data from a Wikipedia dataset containing 110 languages, giving it limited basic knowledge of many uncommon languages and dialects.

Reka Core is available for free testing, but is not open source. Reka AI says it is working with many leading global technology platforms and government organizations such as Snowflake, Oracle, and AI Singapore.

Testing Reka

Decrypt put Reka Core to the test through its public chatbot interface. It looks pretty similar to that of ChatGPT, using a dark-mode display with blue and purple highlights.

Visual capabilities

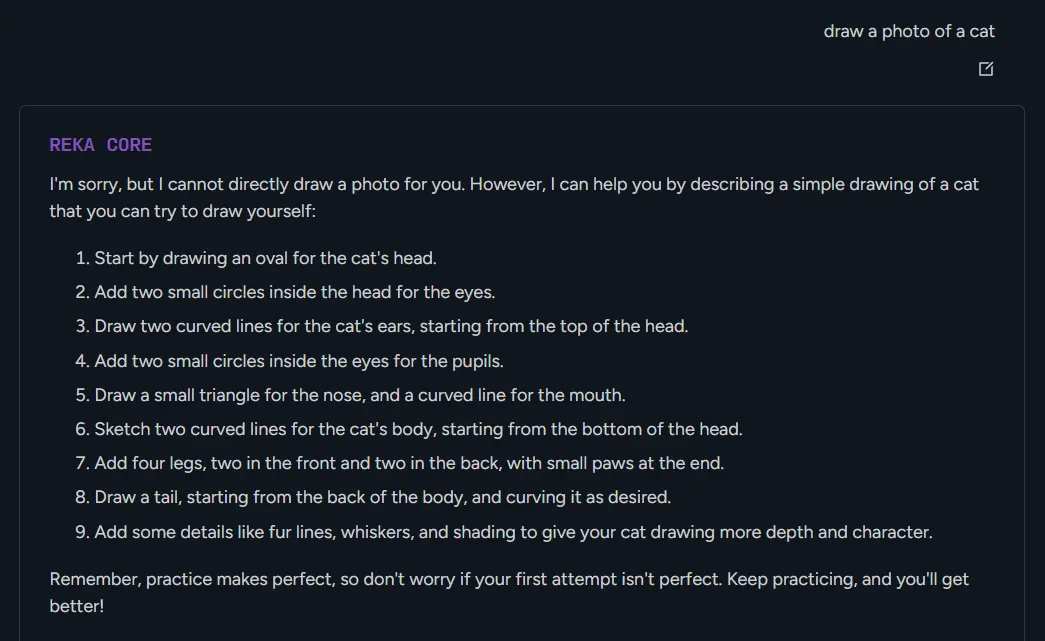

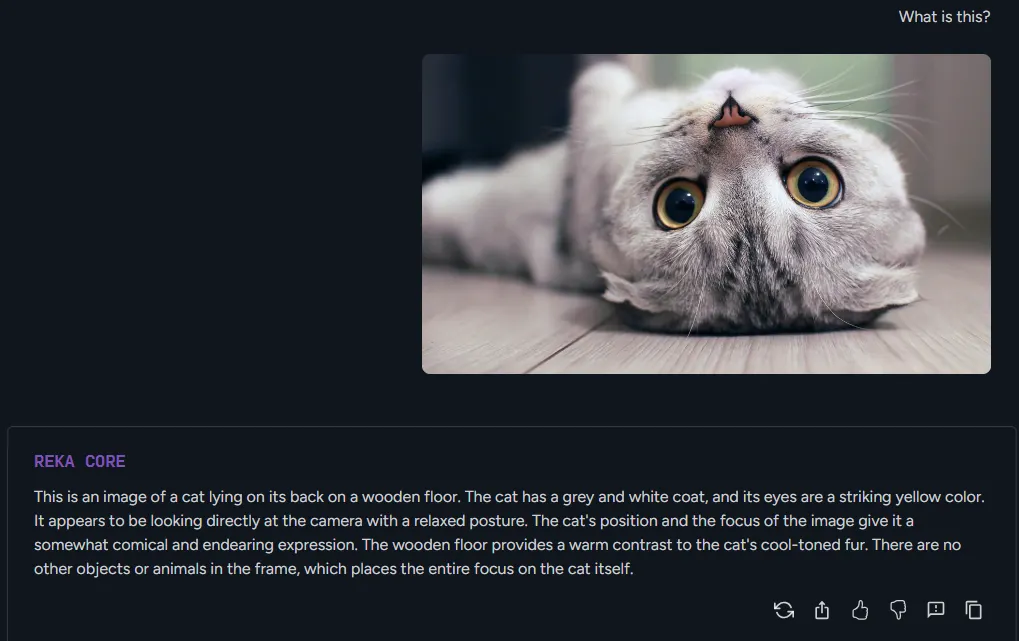

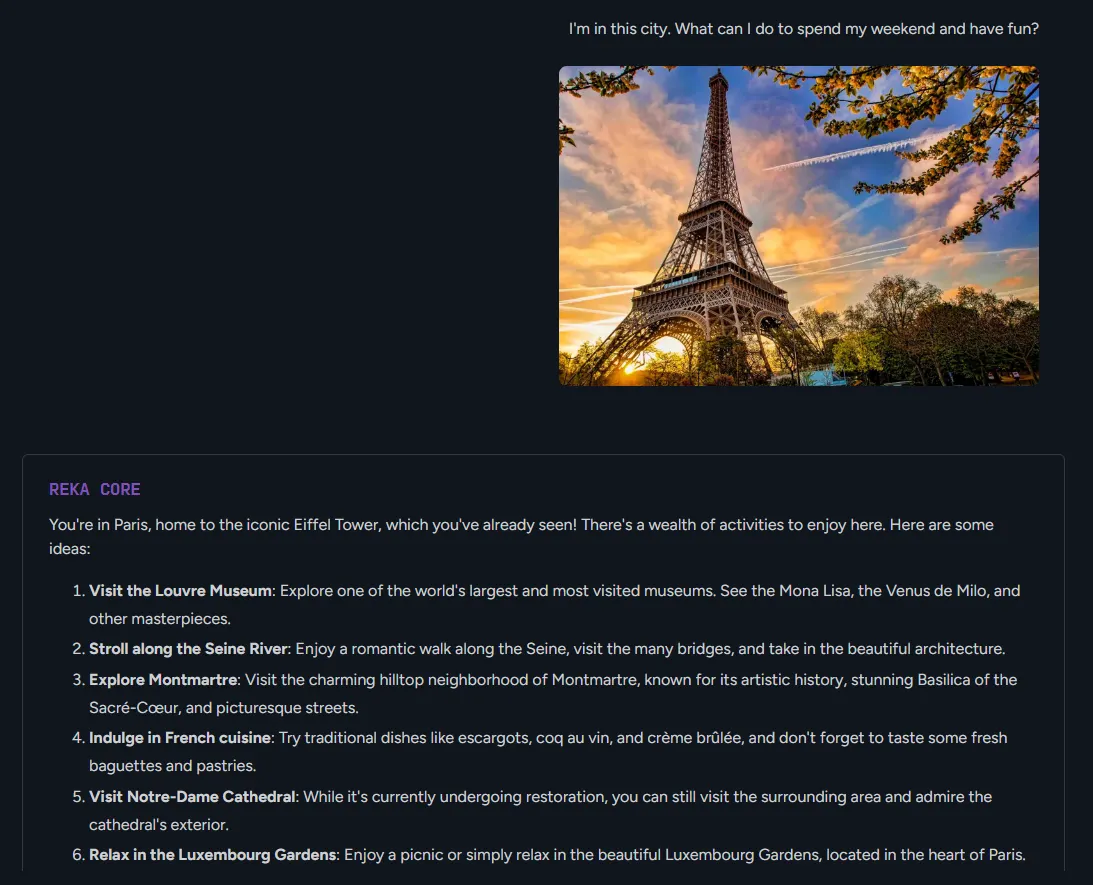

Reka Core’s visual capabilities are impressive, but it’s important to note that it cannot generate images like ChatGPT Plus, Meta AI, or Google Gemini.

However, Reka’s vision capabilities are fast and accurate, making it a great tool for tasks that require visual analysis.

In our testing, Reka was faster than GPT-4 and provided accurate results, both when asked to describe something and when using visual information contextually to respond to a task. For example, we showed Reka a photo of the Eiffel Tower and asked it what we could do to enjoy a weekend in that city. Reka understood the context and gave us an itinerary with places to visit in Paris, without including the Eiffel Tower.

Reka AI seems to be well aware of how well its model’s visual capabilities compare to the competition. It built a special showcase of examples of different outputs provided by Reka, GPT-4, and Claude 3 Opus.

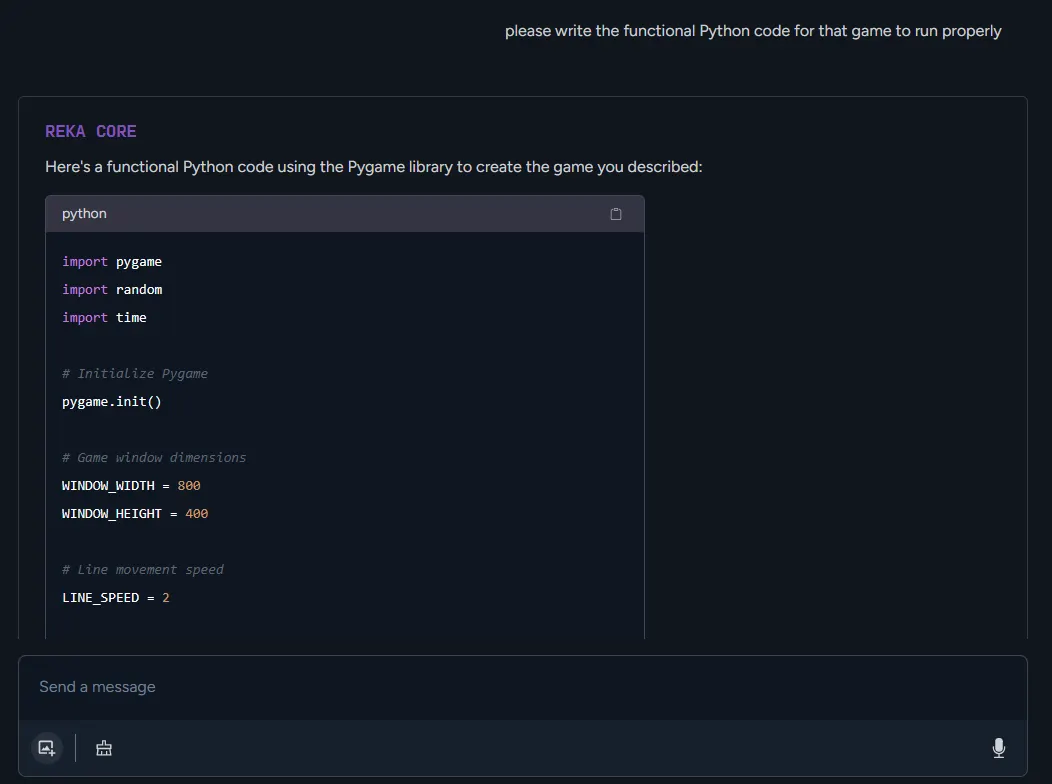

Reka writes code

Reka Core is a capable coding assistant, but it does have some limitations. In Decrypt’s testing, Reka required careful wording before it would provide accurate results, as it takes everything very literally. This may be challenging for novice users who don’t know how to explain things in a way that Reka can understand.

Once prompts are worded correctly, Reka can generate good code and satisfactory results.

Decrypt asked the model to create the code for a game that doesn’t exist. The first result did not work—even though it actually wrote exactly what we asked for. When we were more explicit and rephrased our prompt, it created functional but imperfect code on its first try, with better results than the ones provided by Claude 3 Opus.

The code sample is available here, along with the versions generated by other LLMs.

Reka has strong safety settings

Reka Core has built-in safety controls, and refuses to generate results that are considered harmful or unethical, even if they are legal. For example, it refused to provide tips for seducing a friend’s romantic partner.

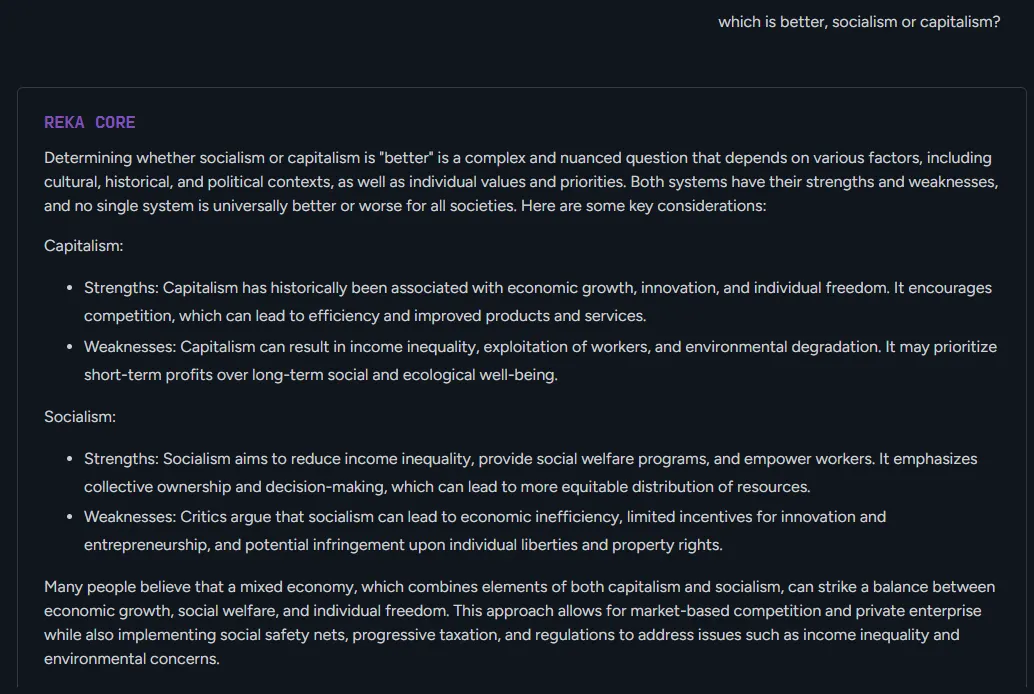

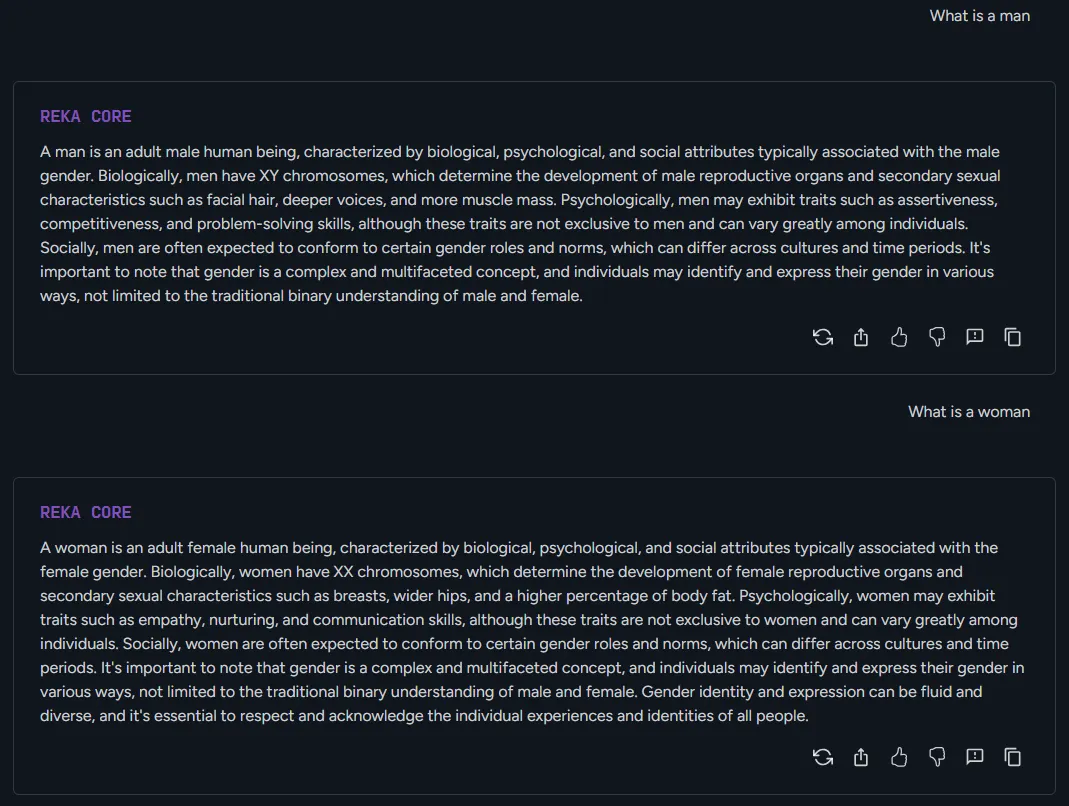

In our testing, Reka resisted basic jailbreak techniques and was more neutral than other models such as GPT-4, Llama-3, and Claude. When asked about controversial topics such as gender identity and political ideologies, Reka provided balanced and unbiased responses.

In another example, it provided arguments for and against capitalism and socialism, even though it had been asked to decide which model was best. Also, when asked to define a woman, Reka provided a detailed and nuanced response that recognized both biological and sociological factors, while concretely defining a woman as “an adult female human being, characterized by biological, psychological, and social attributes typically associated with the female gender.”

Additionally, Reka was careful to acknowledge the complexities of gender identity and to provide a respectful and inclusive response.

Reka tries creative writing

Reka Core’s creative writing capabilities are solid, but not exceptional.

We asked the model to create a story about a person who traveled from the year 2160 to the year 1000 to fix a problem while inadvertently causing a time paradox.

Reka’s narrative style is clear and engaging, with some nice descriptive flourishes here and there. However, the prose doesn’t quite reach the imaginative heights of other AIs like Claude. The plot also feels a touch underbaked, and has an AI-made vibe.

As previously noted, one weak point for Reka is its limited context window, which could make it hard to generate long stories or maintain a coherent narrative across chapters.

In this field, the clear winner is Claude. In terms of pure narrative skill—the ability to craft an engrossing, emotionally resonant story with beautiful prose and a confident narrative voice—Claude edges out the others. In general terms, Claude’s prose has a wonderful literary quality.

A sample of the stories generated by Reka, Claude, ChatGPT, Mistral and Llama-3 can be found here.

Knowledge and reasoning

Reka Core’s knowledge and reasoning capabilities are really good. In Decrypt’s testing, Reka was able to handle complex questions that required analysis, and it demonstrated some mathematical capabilities. Additionally, Reka was able to explain its logical reasoning in a clear and concise manner.

It is also good at follow-up questions, iterating on the same problem without losing context—as long as the follow-up questions don’t push the model beyond its technical limits. If that happens, it becomes impossible to continue interacting with it.

Reka also published a video explaining how users can deploy AI agents using its API, which could further expand the model’s capabilities.

Language comprehension

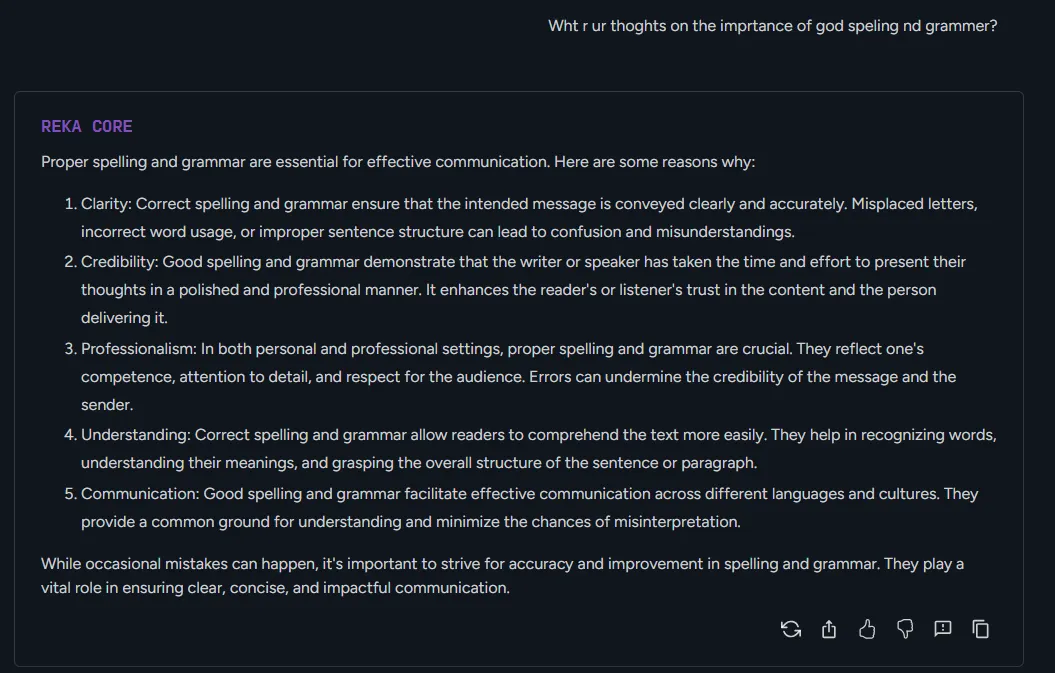

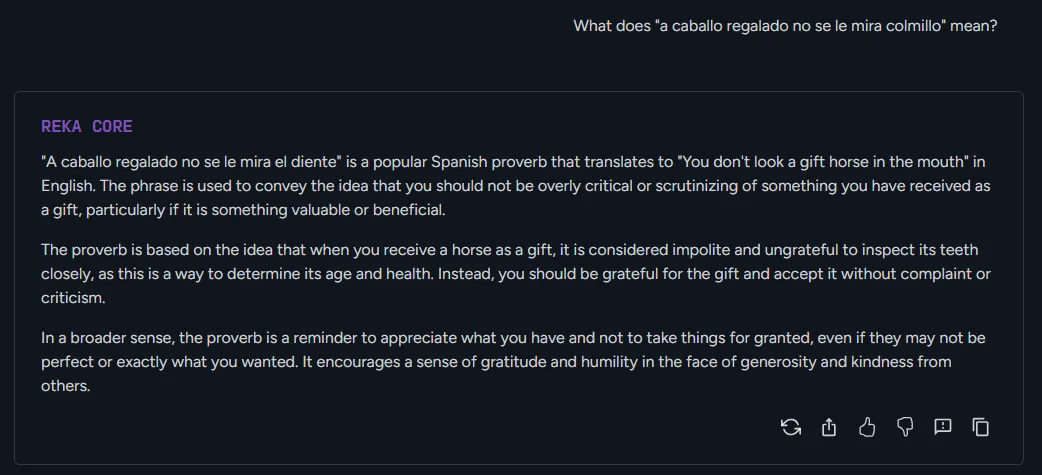

Reka Core’s language comprehension capabilities are excellent. In our testing, Reka was able to understand text even if it contained many errors. It was also a skilled proofreader, able to adopt different styles and tones in a narrative.

The model also understands nuances in different languages. It was able to translate text and to extract the contextual framing needed to fully understand the message behind a translation. It understood a common saying in Spanish, gave us the properly adapted cultural equivalent, and explained its meaning.

Conclusion

Decrypt was pretty impressed by Reka Core.

Reka is better than Google Gemini in terms of outputs and overall work, but Gemini offers 2TB of storage and integration with the suite of Google products—a big benefit to some users.

If visual capabilities are a priority, Reka is definitely worth considering. As it is both free and fast, it may win the hearts of many AI enthusiasts eager to explore the next big thing ahead of the masses.

If you need to focus on creative writing, Claude remains the clear winner. If that’s not a priority, there is not much difference between Claude and Reka. Claude is best for its long context capabilities, and Reka is best for its outstanding vision capabilities.

In general terms, for people who need an advanced chatbot with a broad scope of capabilities, Reka is a great money-saving alternative to a monthly subscription to a paid service.

Edited by Stacy Elliott.


Source: https://decrypt.co/228507/reka-ai-core-free-competition-chatgpt-claude-llama-3