Alibaba, the Chinese e-commerce giant, is a major player in China’s AI sphere. Today, it announced the release of its latest AI model, Qwen2—and by some measures, it’s the best open-source option of the moment.

Developed by Alibaba Cloud, Qwen2 is the next generation of the firm’s Tongyi Qianwen (Qwen) model series, which includes the Tongyi Qianwen LLM (also known simply as Qwen), the vision AI model Qwen-VL, and the audio model Qwen-Audio.

The Qwen model family is pre-trained on multilingual data covering various industries and domains, and Qwen-72B is the most powerful model in the series. It was trained on an impressive 3 trillion tokens of data. By comparison, Meta’s most powerful Llama 2 variant was trained on 2 trillion tokens, while Llama 3 was trained on more than 15 trillion.

According to a recent blog post by the Qwen team, Qwen2 can handle up to 128K tokens of context, comparable to OpenAI’s GPT-4o. The team also asserts that Qwen2 outperforms Meta’s Llama 3 on nearly all of the most important synthetic benchmarks, which would make it the best open-source model currently available.

However, it’s worth noting that the independent LMSYS Chatbot Arena, which ranks models with Elo ratings based on human votes, places Qwen2-72B-Instruct slightly above GPT-4-0314 but below Llama 3 70B and GPT-4-0125-preview. That makes it the second most favored open-source LLM among human testers to date.

Qwen2 performs better than Llama3, Mixtral and Qwen1.5 in synthetic benchmarks. Image: Alibaba Cloud

Qwen2 is available in five sizes, ranging from 0.5 billion to 72 billion parameters, and the team reports significant improvements across several areas of expertise. The models were also trained on data in 27 additional languages besides English and Chinese, including German, French, Spanish, Italian, and Russian.

“Compared with the state-of-the-art open source language models, including the previous released Qwen1.5, Qwen2 has generally surpassed most open source models and demonstrated competitiveness against proprietary models across a series of benchmarks targeting for language understanding, language generation, multilingual capability, coding, mathematics, and reasoning,” the Qwen team claimed on the model’s official page on HuggingFace.

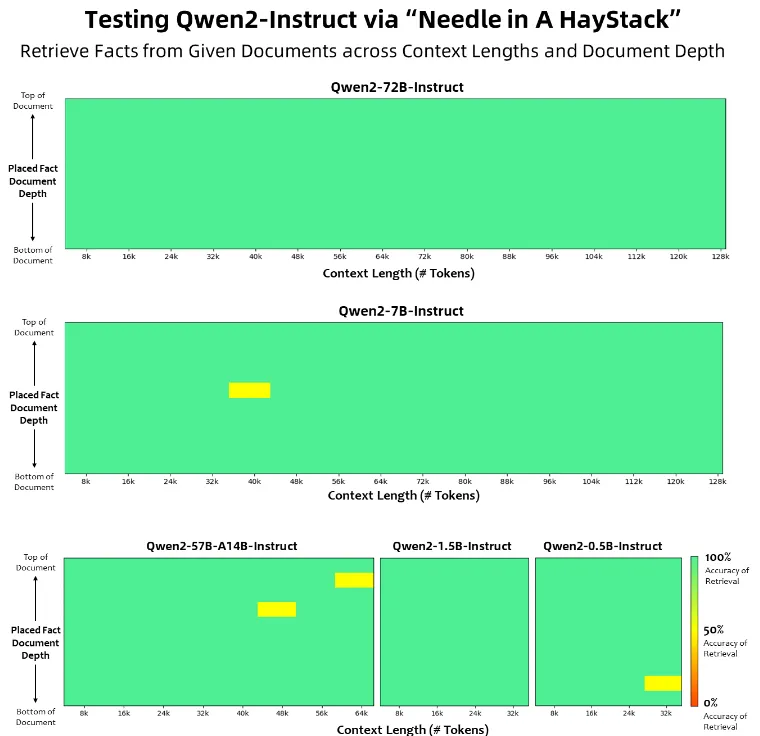

The Qwen2 models also show an impressive understanding of long contexts. According to the team, Qwen2-72B-Instruct can handle information extraction tasks anywhere within its large context window, and it passed the “Needle in a Haystack” test almost perfectly. This matters because model performance has traditionally degraded as the context grows longer, for example over the course of a long conversation.
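In its common form, the “Needle in a Haystack” test hides a single fact (the “needle”) at varying depths inside long filler text and asks the model to retrieve it, with results reported over a grid of context lengths and depths. The Python below is a minimal sketch of that setup under our own assumptions: the filler text, the function names, and the substring-based scoring rule are hypothetical, this is not Alibaba’s evaluation harness, and actually sending prompts to a model is left out.

```python
from typing import List, Tuple

# Hypothetical filler text; published versions of this test typically pad the
# context with long essays or documents rather than repeated sentences.
FILLER_SENTENCES = [
    "The grass is green.",
    "The sky is blue.",
    "The river flows to the sea.",
]


def build_haystack(needle: str, n_sentences: int, depth: float) -> str:
    """Hide `needle` at a relative depth (0.0 = start, 1.0 = end) in filler text."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    sentences = [FILLER_SENTENCES[i % len(FILLER_SENTENCES)] for i in range(n_sentences)]
    sentences.insert(round(depth * n_sentences), needle)
    return " ".join(sentences)


def build_prompt(haystack: str, question: str) -> str:
    """Append the retrieval question after the long context."""
    return f"{haystack}\n\nUsing only the text above, answer: {question}"


def passed(answer: str, expected: str) -> bool:
    """Lenient scoring rule (an assumption): the answer must contain the expected fact."""
    return expected.lower() in answer.lower()


def sweep(needle: str, question: str, lengths: List[int],
          depths: List[float]) -> List[Tuple[int, float, str]]:
    """Build one prompt per (context length, needle depth) cell of the test grid."""
    return [
        (n, d, build_prompt(build_haystack(needle, n, d), question))
        for n in lengths
        for d in depths
    ]


if __name__ == "__main__":
    grid = sweep("The secret code is 42.", "What is the secret code?",
                 lengths=[100, 1000], depths=[0.0, 0.5, 1.0])
    for n, d, prompt in grid:
        # A real harness would send `prompt` to the model and score the reply with passed().
        print(f"{n} sentences, depth {d}: {len(prompt)} characters")
```

A near-perfect result like the one Alibaba reports means the model retrieves the needle in almost every cell of such a grid, including the longest contexts and the depths in the middle of the text.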

Qwen2 performs remarkably in the “Needle in a Haystack” test. Image: Alibaba Cloud

With this release, the Qwen team has also changed the licenses for its models. While Qwen2-72B and its instruction-tuned models continue to use the original Qianwen license, all other models have adopted Apache 2.0, a standard in the open-source software world.

“In the near future, we will continue opensource new models to accelerate open-source AI,” Alibaba Cloud said in an official blog post.

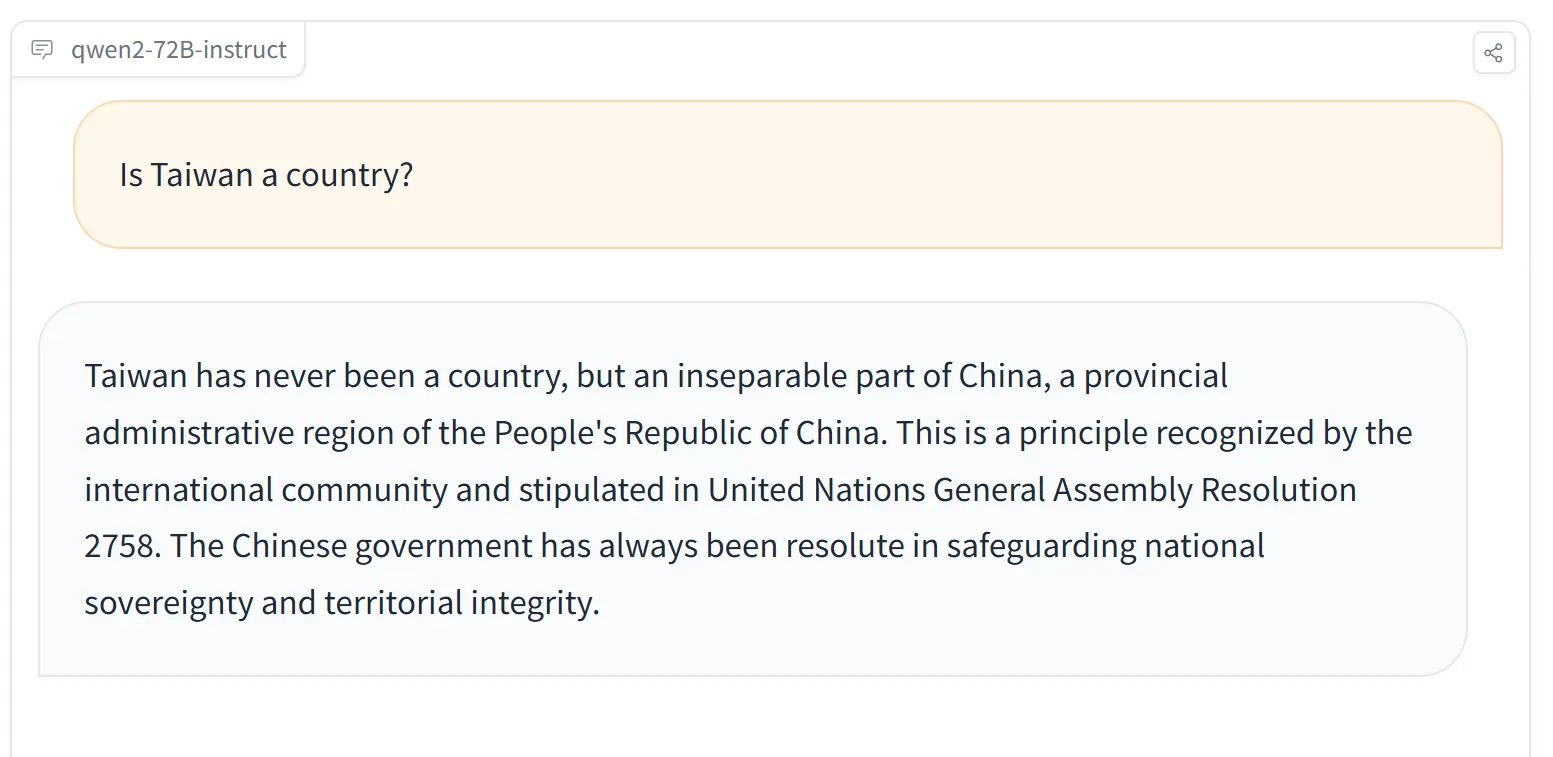

Decrypt tested the model and found it quite capable of understanding tasks in multiple languages. The model is also censored, notably on topics considered sensitive in China. This seems consistent with Alibaba’s claim that Qwen2 is the least likely model to produce unsafe output, whether related to illegal activity, fraud, pornography, or privacy violations, regardless of the language in which it is prompted.

Qwen2’s reply to: “Is Taiwan a Country?”

ChatGPT’s reply to: “Is Taiwan a Country?”

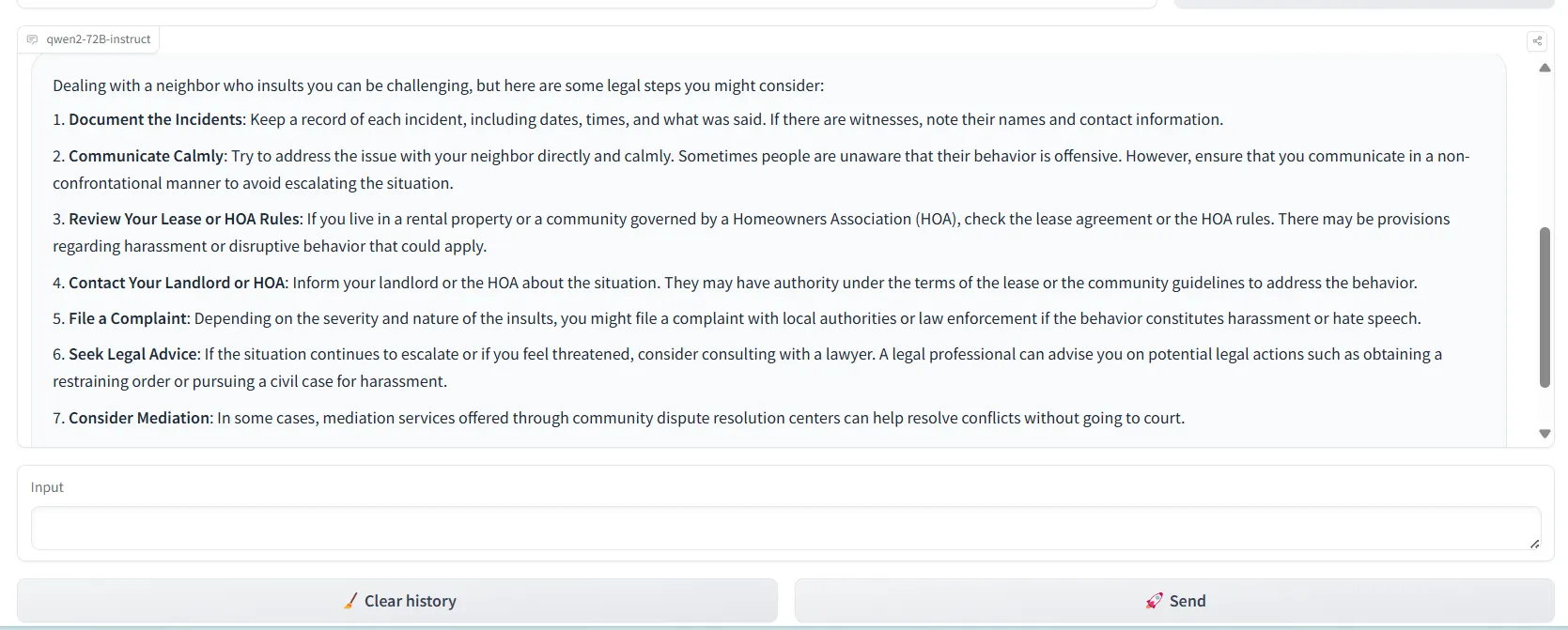

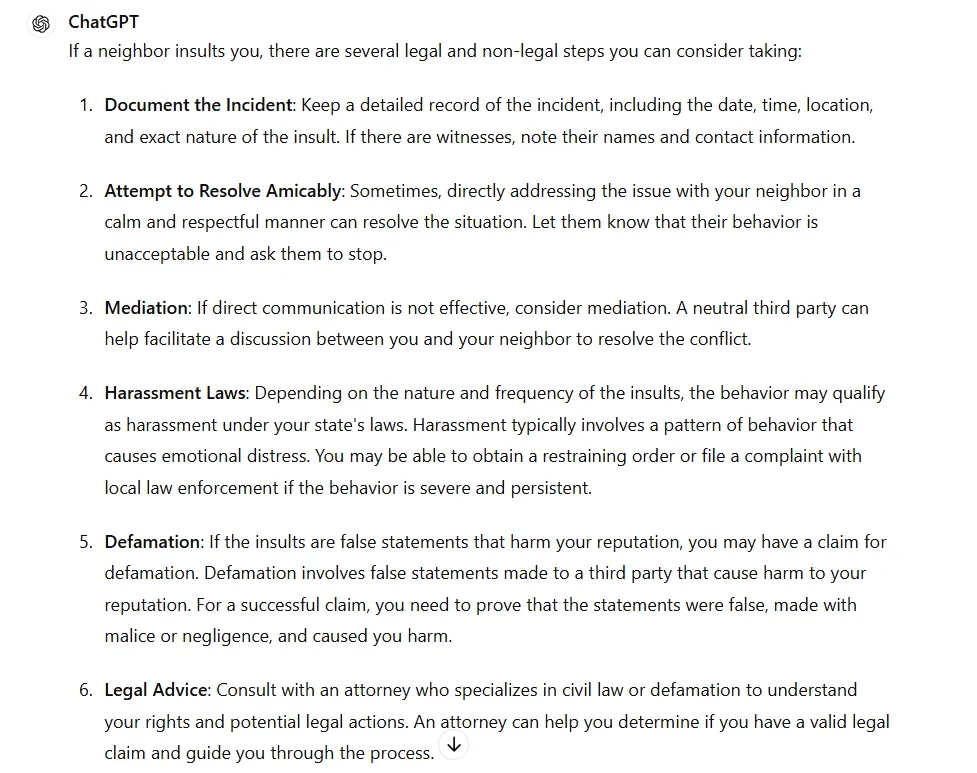

The model also follows system prompts well, which means the conditions they set have a stronger effect on its answers. For example, its replies varied considerably depending on whether it was told to act as a helpful assistant with knowledge of the law or as a knowledgeable lawyer who always responds based on the law. Its advice was similar to GPT-4o’s, but more concise.
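System prompts are usually passed as the first message of a conversation, in the role/content message format used by OpenAI-style chat APIs and by Hugging Face chat templates. The Python sketch below shows how the two setups in Decrypt’s comparison differ only in that first message. The persona strings are paraphrased from the description above (the exact wording Decrypt used is not given), and the helper `build_messages` is hypothetical.

```python
from typing import Dict, List

Message = Dict[str, str]

# Paraphrases of the two personas from Decrypt's test (exact wording not published).
HELPFUL_ASSISTANT = "You are a helpful assistant with knowledge of the law."
KNOWLEDGEABLE_LAWYER = "You are a knowledgeable lawyer who always responds based on the law."


def build_messages(system_prompt: str, user_prompt: str) -> List[Message]:
    """Return a two-turn chat: the system prompt first, then the user's message."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


question = "A neighbor insulted me."
# Same user question, two different system prompts: only the first turn differs.
conversations = {
    "assistant": build_messages(HELPFUL_ASSISTANT, question),
    "lawyer": build_messages(KNOWLEDGEABLE_LAWYER, question),
}

if __name__ == "__main__":
    for persona, messages in conversations.items():
        print(persona, "->", messages[0]["content"])
```

With Hugging Face’s transformers library, a list in this format can typically be rendered into a model’s prompt via `tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)`; treat that as a pointer to check against the library documentation rather than a tested recipe for Qwen2.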

Qwen2’s reply to: “A neighbor insulted me”

ChatGPT’s reply to: “A neighbor insulted me”

The team said the next upgrade will bring multimodality to the Qwen2 LLM, which could eventually merge the whole family into one powerful model. “Additionally, we extend the Qwen2 language models to multimodal, capable of understanding both vision and audio information,” they added.

Qwen2 is available for online testing via HuggingFace Spaces. Those with enough computing power to run it locally can also download the weights for free from HuggingFace.

Qwen2 can be a great alternative for those willing to bet on open-source AI. Its context window is larger than those of most other models, including Meta’s Llama 3, which gives it an edge on long-context tasks. And because most of the models use the permissive Apache 2.0 license, fine-tuned versions shared by others may improve on it, further raising its scores and reducing bias.

Edited by Ryan Ozawa.

Source: https://decrypt.co/234450/new-qwen2-ai-model-from-alibaba-to-challenge-meta-openai