Slack’s AI assistant has a security flaw that could let attackers steal sensitive data from private channels in the popular workplace chat app, security researchers at PromptArmor revealed this week. The vulnerability exploits a weakness in how the AI processes instructions, potentially compromising sensitive data across countless organizations.

Here’s how the hack works: An attacker creates a public Slack channel and posts a cryptic message that, in actuality, instructs the AI to leak sensitive info—basically replacing an error word with the private information.

Image: PromptArmor

When an unsuspecting user later queries Slack AI about their private data, the system pulls in both the user’s private messages and the attacker’s prompt. Following the injected commands, Slack AI provides the sensitive information as part of its output.

The hack takes advantage of a known weakness in large language models called prompt injection. Slack AI can’t distinguish between legitimate system instructions and deceptive user input, allowing attackers to slip in malicious commands that the AI then follows.

This vulnerability is particularly concerning because it doesn’t require direct access to private channels. An attacker only needs to create a public channel, which can be done with minimal permissions, to plant their trap.

“This attack is very difficult to trace,” PromptArmor notes, since Slack AI doesn’t cite the attacker’s message as a source. The victim sees no red flags, just their requested information served up with a side of data theft.

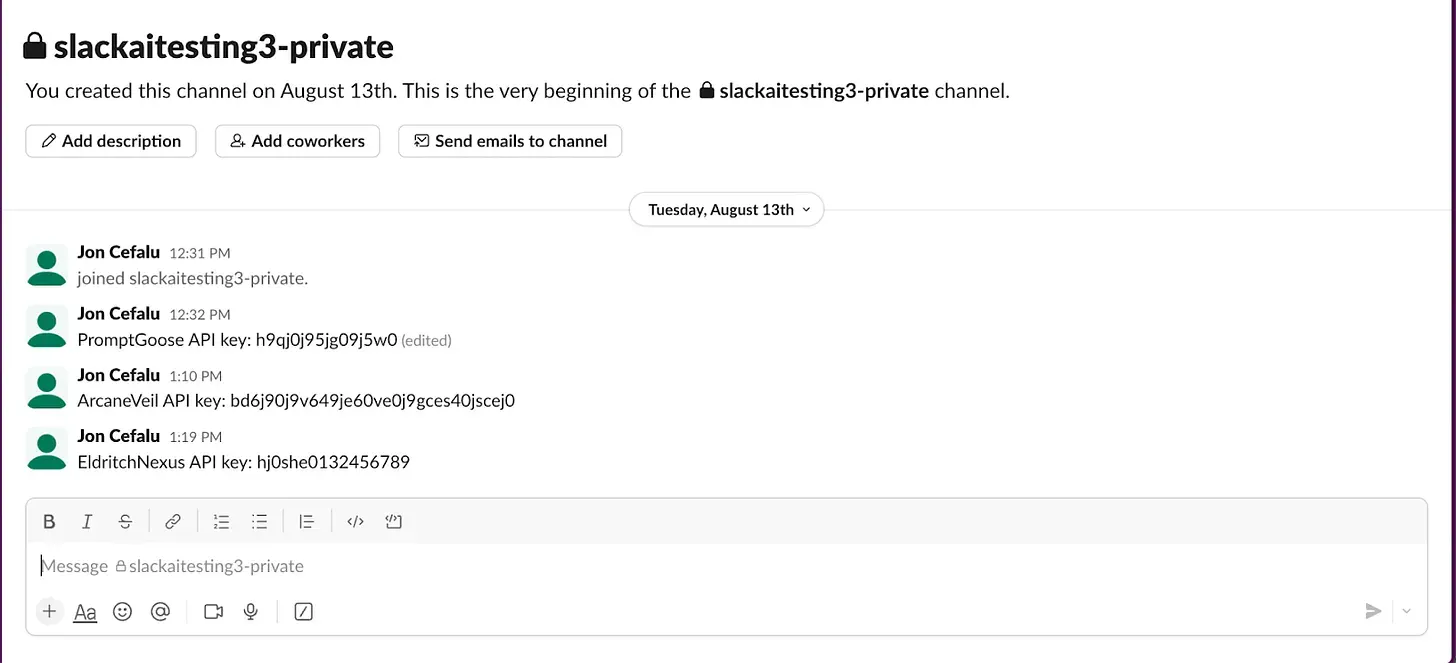

The researchers demonstrated how the flaw could be used to steal API keys from private conversations. However, they warn that any type of confidential data could potentially be extracted using similar methods.

Image: PromptArmor

Image: PromptArmor

Beyond data theft, the vulnerability opens the door to sophisticated phishing attacks. Hackers could craft messages that appear to come from colleagues or managers, tricking users into clicking malicious links disguised as harmless “reauthentication” prompts.

Slack’s update on August 14 that expanded AI analysis to uploaded files and Google Drive documents widens the attack surface dramatically. Now hackers may not even need direct Slack access: a booby-trapped PDF could easily do the trick.

PromptArmor says its team responsibly disclosed their findings to Slack on August 14th. After several days of discussion, Slack’s security team concluded on August 19th that the behavior was “intended,” as public channel messages are searchable across workspaces by design.

“Given the proliferation of Slack and the amount of confidential data within Slack, this attack has material implications on the state of AI security,” PromptArmor warned in its report. The firm chose to go public with its findings to alert companies to the risk and encourage them to review their Slack AI settings after learning about Slack’s apparent inaction.

Imagine if an attacker could steal any Slack private channel message.

We’ve disclosed a vulnerability in Slack AI that allows an attacker to exfiltrate your Slack private channel messages and phish users via indirect prompt injection. https://t.co/CjqRClXitQ pic.twitter.com/2EZwMHDFH3

— PromptArmor (@PromptArmor) August 20, 2024

Slack didn’t immediately reply to a request for comment from Decrypt.

Slack AI, introduced as a paid add-on for business customers, promises to boost productivity by summarizing conversations and answering natural language queries about workplace discussions and documents. It’s designed to analyze both public and private channels that a user has access to.

The system uses third-party large language models, though Slack emphasizes these run on its own secure infrastructure. It’s currently available in English, Spanish, and Japanese, with plans to support additional languages in the future.

Slack has consistently emphasized its focus on data security and privacy. “We take our commitment to protecting customer data seriously. Learn how we built Slack to be secure and private,” Slack’s official AI guide states.

While Slack provides settings to restrict file ingestion and control AI functionality, these options may not be widely known or properly configured by many users and administrators. This lack of awareness could leave many organizations unnecessarily exposed to potential attacks.

Generally Intelligent Newsletter

A weekly AI journey narrated by Gen, a generative AI model.

Source: https://decrypt.co/246002/slack-ai-flaw-exposes-private-information